cloud provider route controller

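When the pod CIDR and the CIDR of the node the pods run on are different, some clouds cannot route traffic between pods and nodes. The route controller is designed to solve this problem by creating the necessary routes.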

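If you want pods started by k8s to get their IPs from a specific CIDR, the common approach is to set the pod CIDR with the KCM (kube-controller-manager) startup flag cluster-cidr. The CIDR for services can likewise be set with the KCM flag service-cluster-ip-range.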

At startup, the cloud provider takes the logical AND of AllocateNodeCIDRs and ConfigureCloudRoutes to decide whether k8s pods get their IPs from the specified CIDR and whether those CIDRs are configured as routes on the cloud. The AllocateNodeCIDRs field is documented as "AllocateNodeCIDRs enables CIDRs for Pods to be allocated and, if ConfigureCloudRoutes is true, to be set on the cloud provider." The ConfigureCloudRoutes field is documented as "configureCloudRoutes enables CIDRs allocated with allocateNodeCIDRs to be configured on the cloud provider."

// startControllers starts the cloud specific controller loops.
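The gating check described above can be sketched as follows. This is a minimal illustration and not the upstream code: the routeSettings type and the shouldRunRouteController function are hypothetical names, and only the two field names come from the text.

```go
package main

import "fmt"

// routeSettings is a hypothetical stand-in for the two configuration
// fields discussed above; it is not the real Kubernetes config type.
type routeSettings struct {
	AllocateNodeCIDRs    bool
	ConfigureCloudRoutes bool
}

// shouldRunRouteController returns true only when both flags are enabled,
// which is the logical AND described in the text.
func shouldRunRouteController(s routeSettings) bool {
	return s.AllocateNodeCIDRs && s.ConfigureCloudRoutes
}

func main() {
	cases := []routeSettings{
		{AllocateNodeCIDRs: true, ConfigureCloudRoutes: true},
		{AllocateNodeCIDRs: true, ConfigureCloudRoutes: false},
		{AllocateNodeCIDRs: false, ConfigureCloudRoutes: true},
	}
	for _, c := range cases {
		fmt.Printf("allocate=%v configure=%v run=%v\n",
			c.AllocateNodeCIDRs, c.ConfigureCloudRoutes, shouldRunRouteController(c))
	}
}
```

With either flag off, no cloud routes are managed, so pod CIDRs are reachable only if something else (for example a CNI overlay) provides the connectivity.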

This Run function follows the same pattern the cloud provider uses to run its other controllers, and there is nothing in it that needs special attention.

func (rc *RouteController) Run(stopCh <-chan struct{}, syncPeriod time.Duration) {

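The only thing worth pointing out in this part of the implementation is the function name reconcile. In k8s controllers, the process of bringing the real world in line with the declared state is called reconcile.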

func (rc *RouteController) reconcileNodeRoutes() error {

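This is where the actual reconcile happens: find the difference between the real state and the desired state, then use the interface provided by the cloud provider's route implementation to add or delete route resources on the cloud until the real state matches the desired one.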

func (rc *RouteController) reconcile(nodes []*v1.Node, routes []*cloudprovider.Route) error {

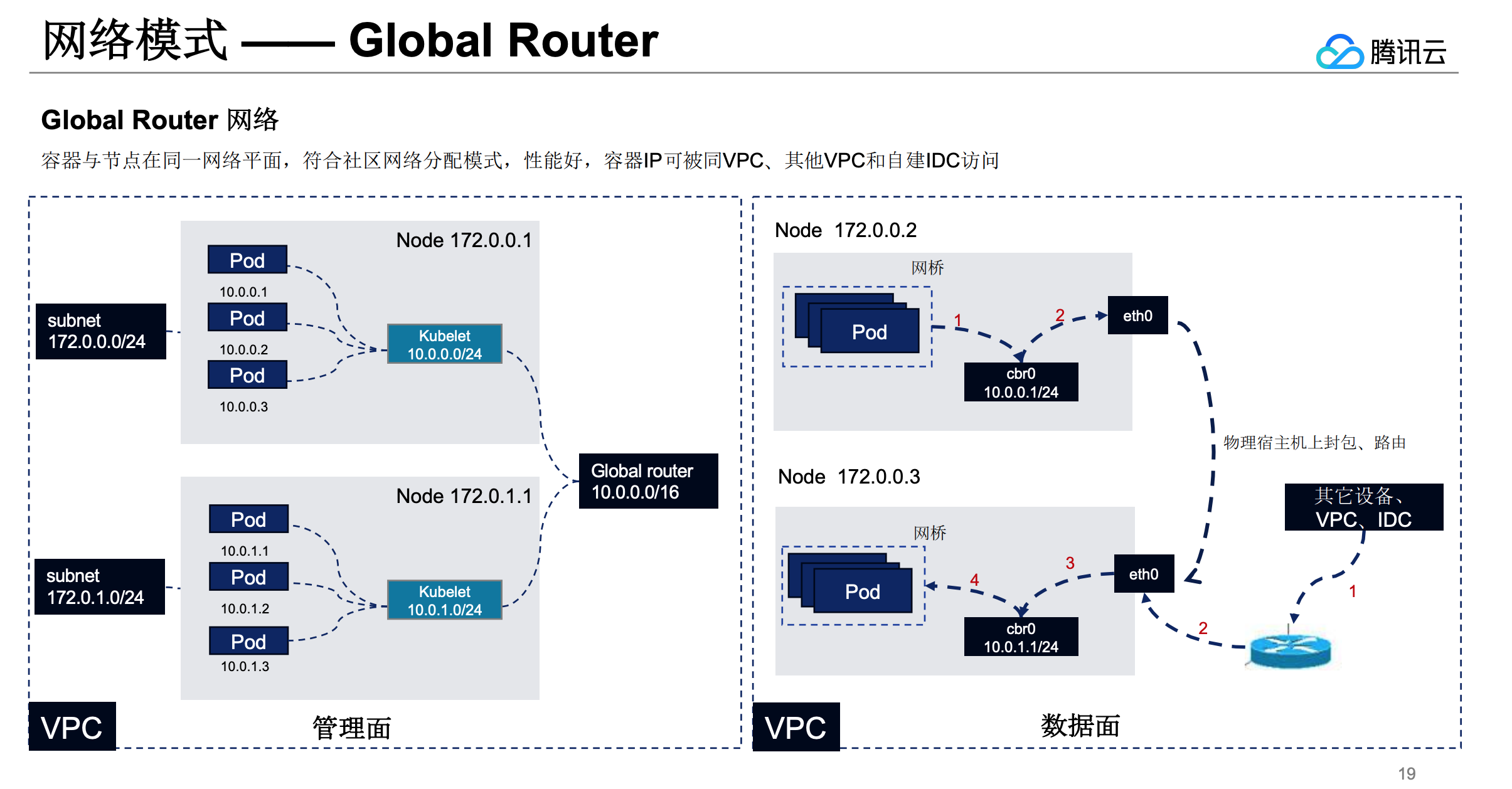

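The global router network mode of Tencent Cloud TKE currently works as described above. In global router mode, the route controller registers a route on the cloud when a node starts. In the figure, when the kubelet on node 172.0.0.1 reports ready, the route controller registers a route for 10.0.0.0/24 in the VPC, and every pod on 172.0.0.1 gets its IP from the 10.0.0.0/24 CIDR. This is how pods become reachable from the rest of the VPC.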